Hiring intelligence: end-to-end experience

Role: UX leader

Contributors: Product, UX designers, organizational leaders

Timeline: 6 months

Results: Coordinated roadmap with executive buy-in

Candidate quality is a perennial complaint from employers on Indeed, especially when hiring for roles such as nursing or truck driving that require specialized licenses and certifications.

The problem is important enough that Indeed sought to monetize improvements to the screening process.

Hiring Intelligence brings together the groups that capture job requirements from employers, screen candidates during the application process, and present the results back to employers (which we call explainability).

Understanding our users

“I paid hundreds of dollars for people that when I talk to them, they’re like, no, I’m not certified, but I’m willing to get it.

It’s like, you’re not qualified for this job.

And it’s […] super time consuming and frustrating.”

— Carrie

What does Carrie need?

Carrie became the symbolic representative of employers hiring for roles that require certifications or licenses. We constantly asked ourselves: would this help Carrie?

Confidence in the reliability and accuracy of candidates' application data.

To deliver that confidence, Indeed needs:

High-quality job posts

Clearly communicate requirements and preferences for the role.

More sophisticated screening methods

Effective and equitable screening methods to evaluate job seeker qualifications.

Evidence to explain the results

Demonstrate that candidates meet the job's requirements.

01 High-quality job posts

Employers like Carrie spend a lot of time agonizing over the details of their job posts, often going through several rounds of revisions with collaborators. We can leverage that work by extracting the job requirements into structured data, which supports better matching and sensible default screening methods. Over time, as our models improve and we see that employers no longer need to edit the extractions, we can make the extractions a less prominent part of the experience.

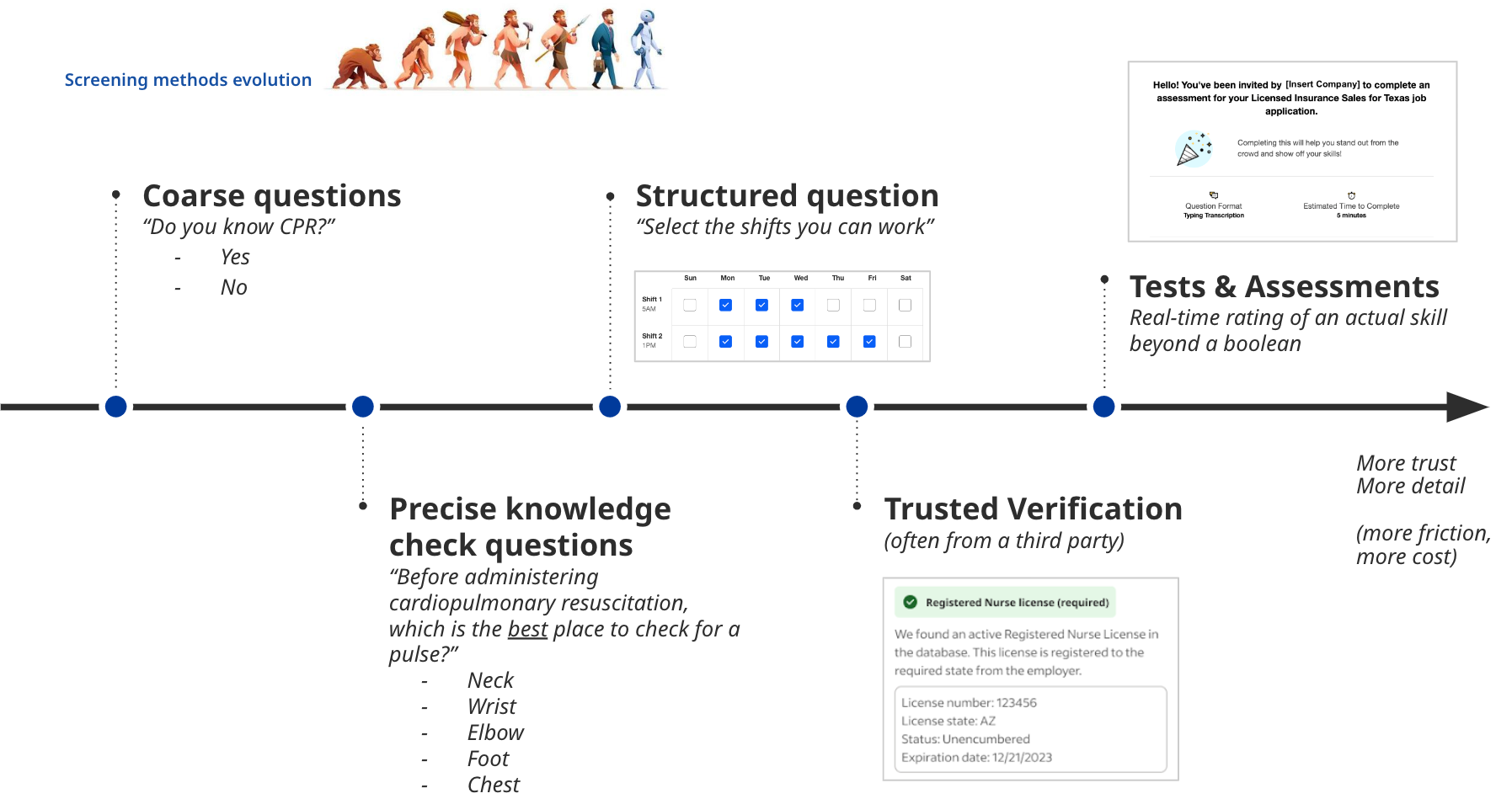

02 More sophisticated screening methods

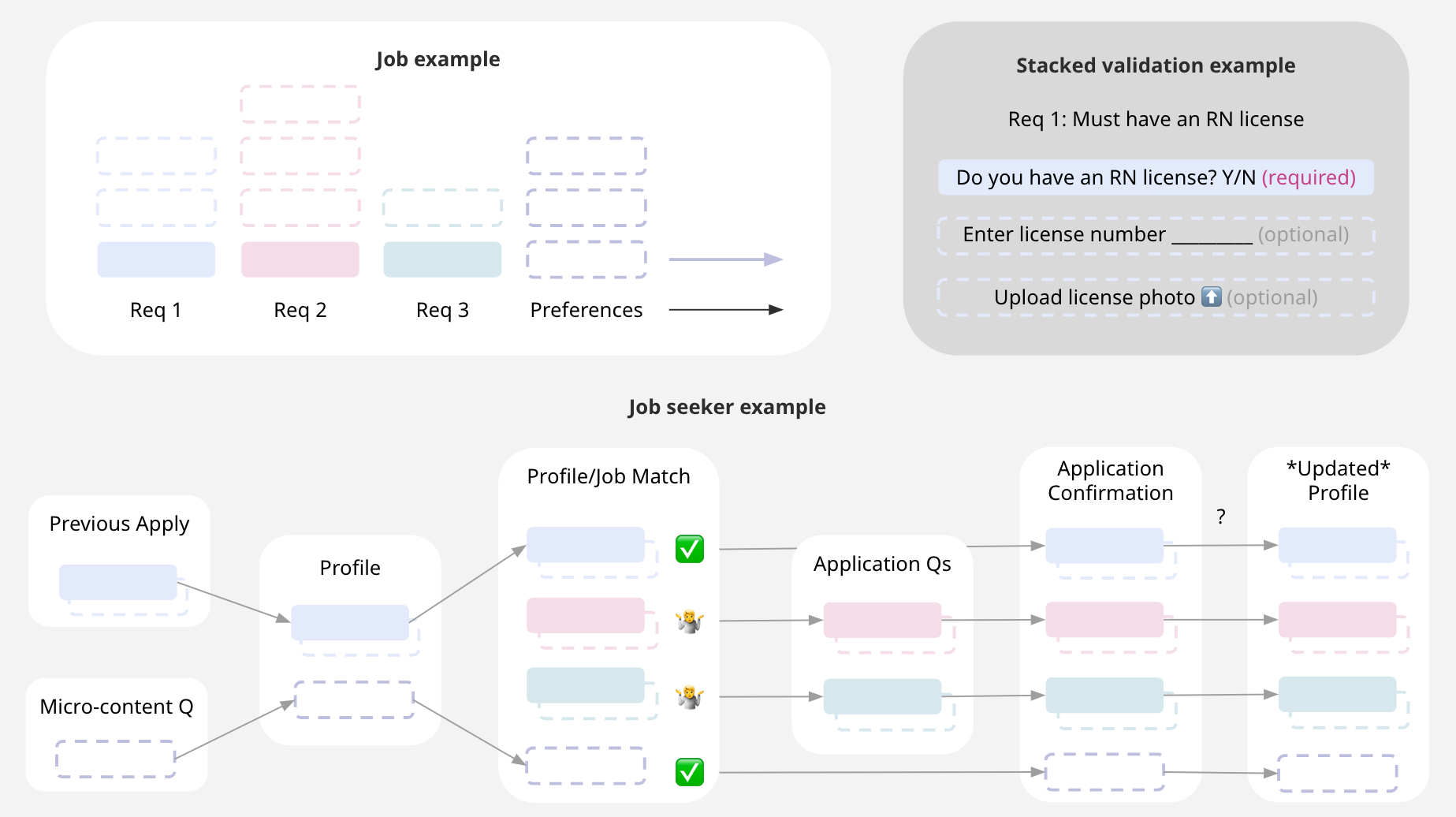

The current approach of asking job seekers yes/no questions during the application process and expecting those answers to be 100% truthful is problematic. As UX practitioners, we would rarely ask for user feedback this way. Instead, we would ask more open-ended questions that leave room to interpret the answers. For critical questions, we would also ask the same question in multiple ways and triangulate the responses.

When we ask a question can also affect the response. For example, job seekers understandably tailor their answers to a specific job while they are applying. But to build a well-rounded understanding of a job seeker (the full breadth of their experience and how they might match any job in the future), we should consider additional surfaces for engaging job seekers and collecting this information. Once collected, the information can be saved to their profile so we don't have to ask for it again, and job seekers save time the more they use Indeed.

03 Evidence to explain the results

Once we have collected the job requirements and preferences and screened candidates with more sophisticated methods (sometimes using multiple methods to gather evidence for critical requirements), we want to present all of that high-quality information back to employers so they feel confident in the reliability and accuracy of candidates' application data.

This is the "aha" moment for Carrie.

Hypothesis for testing

We think if we…

improve job post quality

offer compelling and effective screening packages

show evidence that candidates meet the job's requirements

… we’ll see POVR go up and Ask’Em complaints go down.

Q1 incremental feature delivery

Build models to extract requirements from job descriptions

Add support for new question types in screening

Collect licenses from job seekers during the application process

Highlight requirements for employers on candidate profiles